Cloud Computing

Introduction to the Cloud World:

Definition of Cloud Computing

- General: The cloud refers to something whose exact physical location you don’t know; it is remote and can be accessed through the Internet.

- Scientific: Delivering computing resources as services that can be accessed at any time over a network, most commonly the Internet.

- NIST : Cloud computing is a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction.

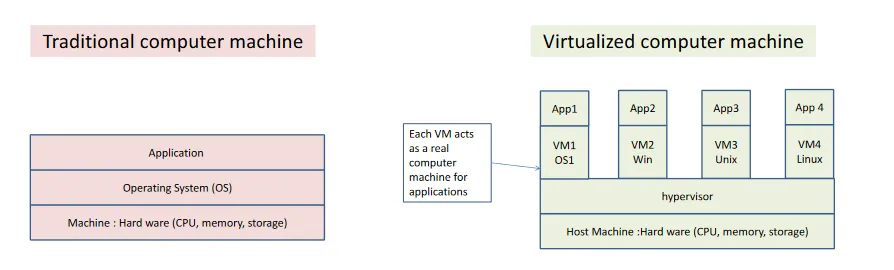

Traditional Computing

- Pros:

- Privacy

- One-time payment

- Cons

- Scalability: It is hard to scale your computing resources up or down.

- Cost: Devices may sit idle (unused) for long periods while still consuming power and working hours.

- Complexity: Owning and maintaining your own IT infrastructure is complex.

Cloud Computing

- Pros:

- Rapid development

- Pay as you go

- Reduce cost

- Scalability

- Reliability

- Accessibility

- Cons

- Data loss: Loss of access to data and services if you are not connected to the Internet.

- Security issues: Potential privacy and security risks of putting valuable data on someone else’s system in an unknown location.

- Instability: What happens if your supplier suddenly decides to stop supporting a product or system you’ve come to depend on?

Comparison: Traditional vs. Cloud Computing

| | Traditional Computing | Cloud Computing |
|---|---|---|
| Acquisition Model | Buy assets; build technical infrastructure | Buy service; infrastructure included |
| Business Model | Pay for assets; administrative overhead | Pay for use; reduced admin function |
| Access Model | Internal networks; corporate desktop | Over the Internet; any device |
| Management Model | Single-tenant; non-shared; static | Multi-tenant; scalable; elastic; dynamic |
| Delivery Model | Costly; lengthy deployment time; land & expand difficulties | Reduced deployment time; fast ROI |

Cloud Computing Architecture

Fundamental Concepts

- Allows users to get computing functionality without owning the software and the hardware.

- Everything is done remotely; nothing is saved locally.

Challenges

- Availability of service: what happens when the service provider cannot deliver?

- Data confidentiality and auditability are serious problems.

- The diversity of services, data organization, and user interfaces at different service providers limits user mobility; once a customer is hooked to one provider, it is hard to move to another.

- Data transfer bottleneck: many applications are data-intensive.

Main Technologies and Blocks

- Virtualization: Virtualization is a technique that allows sharing one physical instance of an application or resource among multiple customers or organizations.

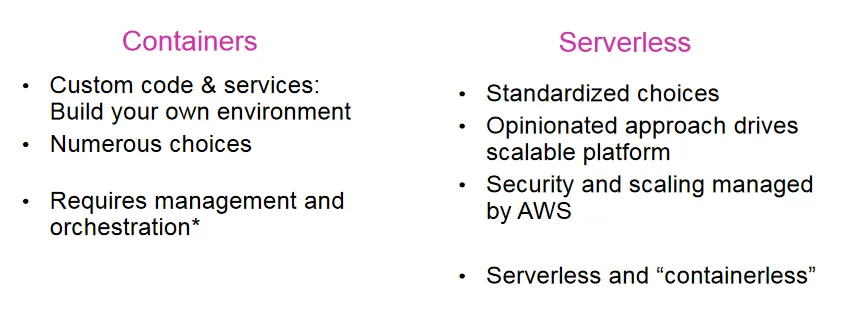

- Containers

- Service-Oriented Architecture: SOA is an application framework which takes business applications and divides them into separate business functions and processes called Services.

- Microservices

- Separation of concerns, flexibility, reusability.

- Utility Computing: Utility computing is based on a pay-per-use model. It provides computational resources on demand as a metered service. The amount consumed is measured and the user pays the bill accordingly.

- Serverless computing

Characteristics of cloud computing

- On-demand self-service

- A consumer can request computing capabilities, such as server time and network storage, as needed automatically without requiring human interaction.

- Broad network access:

- Capabilities are available over the network and accessed through standard mechanisms that allow the use of heterogeneous machines (e.g., mobile phones, laptops, and personal digital assistants [PDAs]).

- Elastic resource pooling

- Consumers can scale up and scale down at any time.

- Pay as you go

- Consumers pay only for the resources that they use. When scaling up they pay more; when scaling down they pay less.

- Measured service

- Cloud systems automatically control resource use by leveraging a metering capability (e.g., for storage, processing, bandwidth, and active user accounts).

- Resource usage can be monitored, controlled, and reported.
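Measured service and pay-as-you-go billing come down to multiplying metered usage by unit prices. The sketch below assumes a hypothetical rate card (the metric names and prices in `RATES` are invented for illustration, not any provider's real prices) and shows the bill rising when a consumer scales up and falling when they scale down.

```python
# Hypothetical unit prices; real providers publish their own rate cards.
RATES = {
    "vm_hours": 0.05,          # price per VM-hour
    "storage_gb_month": 0.02,  # price per GB stored for one month
    "egress_gb": 0.09,         # price per GB transferred out
}


def monthly_bill(usage: dict[str, float], rates: dict[str, float] = RATES) -> float:
    """Charge only for what was metered: the sum of quantity * unit price."""
    unknown = set(usage) - set(rates)
    if unknown:
        raise ValueError(f"no rate defined for: {sorted(unknown)}")
    return round(sum(qty * rates[metric] for metric, qty in usage.items()), 2)


# Scaled up: 3 VMs running the whole month (3 * 720 hours).
busy_month = {"vm_hours": 3 * 720, "storage_gb_month": 100, "egress_gb": 50}
# Scaled down: a single VM for the same month.
quiet_month = {"vm_hours": 1 * 720, "storage_gb_month": 100, "egress_gb": 50}

print(monthly_bill(busy_month))   # 114.5
print(monthly_bill(quiet_month))  # 42.5
```

Storage and egress cost the same in both months; only the metered VM-hours change, which is exactly the "pay more when scaling up, less when scaling down" behavior described above.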

Before Cloud Computing

- Cluster computing

- Definition : A cluster is a type of parallel or distributed processing system, which consists of a collection of interconnected stand-alone computers cooperatively working together as a single, integrated computing resource

- Techniques: the techniques applied to solving computationally intensive applications across networks of computers are parallel computing and distributed computing.

- Cluster applications: Applications that need high computational power, such as weather modeling, airplane simulations, scientific computation, digital biology, nuclear simulations, image processing, data mining, and astrophysics.

- Grid computing

- Definition : The grid is a hardware/software infrastructure that enables heterogeneous (The computer configurations are highly varied) geographically separated clusters of processors to be connected in a virtual environment.

| Cluster computing | Grid computing |
|---|---|
| Computing components are connected together using a fast local area network (LAN); the devices are physically in the same place. | Devices such as desktops, laptops, and servers are connected together to form a single network. They are physically separated and connected together over the Internet. |
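The parallel-computing technique mentioned for clusters splits one computationally intensive job into chunks, runs the chunks on different workers, and combines the partial results. The sketch below is an assumption-laden stand-in: threads on one machine play the role of cluster nodes (in CPython they will not actually speed up CPU-bound work because of the global interpreter lock; a real cluster would send each chunk to a separate computer, e.g. with MPI). Only the decomposition pattern is the point.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_sum_of_squares(bounds: tuple[int, int]) -> int:
    """The work one node would do on its own chunk of the problem."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def split(n: int, workers: int) -> list[tuple[int, int]]:
    """Divide the range [0, n) into at most `workers` contiguous chunks."""
    step = max(1, -(-n // workers))  # ceiling division, at least 1
    return [(s, min(s + step, n)) for s in range(0, n, step)]


def parallel_sum_of_squares(n: int, workers: int = 4) -> int:
    # Each worker thread stands in for one computer of the cluster.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Scatter the chunks, then combine (reduce) the partial results.
        return sum(pool.map(partial_sum_of_squares, split(n, workers)))


print(split(10, 3))                   # [(0, 4), (4, 8), (8, 10)]
print(parallel_sum_of_squares(1000))  # 332833500
```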

Cloud Clients

- Web browser

- mobile app

- thin client

- terminal emulator

Cloud Computing Models

Cloud Deployment Models (CDM)

Deployment models describe the ways in which cloud services can be deployed or made available to customers, depending on the organizational structure and the provisioning location.

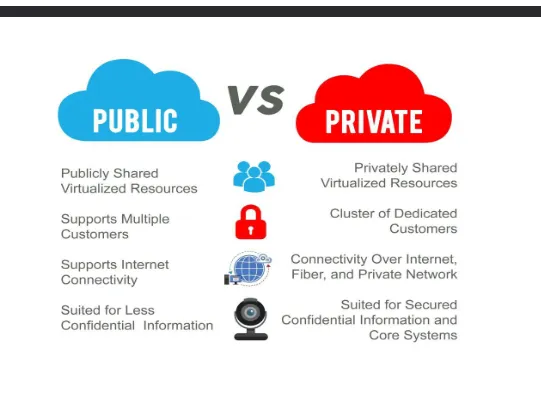

Public cloud

The cloud infrastructure is provisioned for open use by the general public.

- All hardware components exist on the premises of the cloud provider.

- All resources are shared between several consumers.

- Public clouds are the most popular cloud services that people often use.

- Public clouds offer many free services such as email, spreadsheets, and documents that make daily operations quick and easy.

- Any user can access the cloud and benefit from it. The user does not actually know the location of the physical server that provides the different services, but is sure that their work gets done.

- Advantages of public cloud: low cost, high scalability, pay as you go, and no need to manage or maintain infrastructure.

Private cloud

In a private cloud, only one specific organization owns the cloud. The private cloud infrastructure is provisioned for exclusive use by a single organization comprising multiple consumers (e.g., business units).

- Computing resources are exclusive to a single organization or tenant (company, government, university).

- Users can gain access to the private cloud through the company’s intranet or through a virtual private network (VPN).

- No sharing of computing resources (storage, memory, processor, applications).

- All cloud services are dedicated to a single user (organization). The servers can be hosted externally or on the premises of the owner company.

- Managing and controlling private cloud may be done internally by the organization or externally by provider.

- Types

- Hosted on-premises: This type of private cloud is hosted on the customer's premises by a separate cloud service provider. The provider's role is to manage and control the software and virtualization that allow servers located on the customer side to operate as a cloud environment.

- Externally hosted (in the provider's data center): The cloud is hosted in a provider data center, but the server is not shared with other organizations. The cloud service provider is responsible for configuring the network and maintaining the hardware for the private cloud, as well as keeping the path secured.

- Virtual private cloud:

The resources in a virtual private cloud exist in a walled-off area on a public cloud instead of being hosted on-premises. The resources are located in an isolated virtual network.

- Manage network: You can plan and manage the network that can access the private resources by customizing the IP address range.

- Subnet division: You can divide the private IP address range of the VPC into several subnets using vSwitches, and define custom access rules.

- Advantages

- Higher privacy and security

- Improved reliability

- More control over resources

- Disadvantages

- Higher cost

- Higher complexity for configuration and management
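The VPC "Subnet division" point is mostly address arithmetic. The sketch below uses Python's standard `ipaddress` module to carve a hypothetical VPC range (`10.0.0.0/16`) into /24 subnets and checks traffic against a hypothetical rule table. In a real provider, vSwitches and access rules are configured through that provider's own console or API; this only models the idea.

```python
import ipaddress
from typing import Optional

# Hypothetical VPC address range chosen by the customer.
vpc = ipaddress.ip_network("10.0.0.0/16")

# Subnet division: carve the VPC range into /24 subnets (256 addresses each).
subnets = list(vpc.subnets(new_prefix=24))
web_subnet, db_subnet = subnets[0], subnets[1]

# Custom access rules: (source subnet, destination subnet) pairs allowed to talk.
ALLOWED = {(web_subnet, db_subnet)}


def subnet_of(address: str) -> Optional[ipaddress.IPv4Network]:
    """Return the VPC subnet containing the address, or None if it is outside the VPC."""
    ip = ipaddress.ip_address(address)
    return next((net for net in subnets if ip in net), None)


def can_connect(src: str, dst: str) -> bool:
    return (subnet_of(src), subnet_of(dst)) in ALLOWED


print(len(subnets), web_subnet, db_subnet)  # 256 10.0.0.0/24 10.0.1.0/24
print(can_connect("10.0.0.5", "10.0.1.7"))  # True: web tier may reach the database
print(can_connect("10.0.1.7", "10.0.0.5"))  # False: no rule for that direction
```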

Hybrid cloud

A hybrid cloud is an IT infrastructure that connects at least one public cloud and at least one private cloud.

- It provides orchestration, management and application portability between them to create a single, flexible, optimal cloud environment for running a company’s computing workloads.

- It combines both public and private clouds, separating critical workloads from less-sensitive ones.

- Benefits of Hybrid cloud:

- Separating critical workloads from less-sensitive workloads: You might store sensitive financial or customer information and applications on your private cloud, and use a public cloud to run the rest of your enterprise applications.

- Big data processing: You could run some of your big data analytics using highly scalable public cloud resources, while also using a private cloud to ensure data security and keep sensitive big data behind your firewall.
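The first hybrid-cloud benefit is a placement rule: sensitive workloads stay private, everything else may go public. A minimal sketch of that rule, assuming each workload is simply tagged as sensitive or not (the workload names are hypothetical):

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    sensitive: bool  # e.g. handles financial or customer data


def place(workloads: list[Workload]) -> dict[str, list[str]]:
    """Keep sensitive workloads on the private cloud; send the rest to the public cloud."""
    plan: dict[str, list[str]] = {"private": [], "public": []}
    for w in workloads:
        plan["private" if w.sensitive else "public"].append(w.name)
    return plan


print(place([
    Workload("payroll-db", sensitive=True),
    Workload("marketing-site", sensitive=False),
    Workload("customer-records", sensitive=True),
    Workload("log-analytics", sensitive=False),
]))
# {'private': ['payroll-db', 'customer-records'], 'public': ['marketing-site', 'log-analytics']}
```

Real placement decisions also weigh cost, capacity, and latency; a single boolean is the simplest version of the separation described above.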

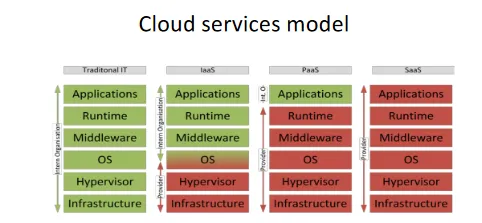

Cloud Service Models (CSM)

The red levels are run and operated by the service provider.

- SaaS (Applications)

- Also referred to as “software on demand.”

- A service model that involves outsourcing the infrastructure, platform, and software/applications.

- A cloud provider hosts applications and makes them available to end users over the internet. In this model, a software vendor (SV) may contract a cloud provider to host the application, and make it available for consumers.

- Users pay a subscription fee to gain access to the software functionality, which is a ready-made solution.

- A single version and configuration of the application runs across all customers.

- The customer accesses the functionality over the internet.

- Example : CRM, Email, virtual desktop, communication, games

- PaaS (Development environment)

- A service model that involves outsourcing the basic infrastructure, the platform (Windows, Unix), and the application development environment.

- It is a complete development and deployment environment in the cloud, with resources that enable you to deliver everything from simple cloud-based apps to sophisticated, cloud-enabled enterprise applications.

- PaaS lets developers create applications using built-in software components, reducing the amount of coding that developers must do.

- Analytics or business intelligence: Tools provided as a service with PaaS allow organizations to analyze and mine their data, predicting outcomes to improve forecasting, product design decisions, investment returns, and other business decisions.

- PaaS facilitates deploying applications without the cost and complexity of buying and managing the underlying hardware and software where the applications are hosted.

- The customer codes their own applications.

- Example : Execution runtime, database, web server, development tools.

- IaaS (Hardware)

- A service model that involves outsourcing the basic infrastructure used to support operations, including storage, hardware, servers, and networking components.

- The service provider owns the infrastructure equipment and is responsible for housing, running, and maintaining it. The customer typically pays on a per-use basis.

- The customer uses their own platform (Windows, Unix) and applications.

- Example: Virtual machines, servers, storage, load balancers, network.

- Use Cases:

- Website hosting: Running websites using IaaS can be less expensive than traditional web hosting.

- Web apps: IaaS provides all the infrastructure to support web apps, including storage, web and application servers, and networking resources.

- High-performance computing: High-performance computing (HPC) on supercomputers, computer grids, or computer clusters helps solve complex problems involving millions of variables or calculations.

- Big data analysis: Big data is a popular term for massive data sets. Mining data to extract useful information needs a huge amount of processing power, which IaaS provides economically.
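The three service models differ mainly in where the line falls between what the provider manages and what the customer manages. The sketch below encodes that line, assuming the commonly taught nine-layer stack (the layer names are an assumption; exact boundaries vary between providers). It matches the notes above: in IaaS the customer brings the OS and applications, in PaaS only the applications and data, in SaaS nothing.

```python
# Layers of the stack, from the top (what the user sees) to the bottom (hardware).
LAYERS = [
    "applications", "data", "runtime", "middleware", "os",
    "virtualization", "servers", "storage", "networking",
]

# For each model, the first layer (from the top) the provider manages;
# everything from that layer down is the provider's responsibility.
PROVIDER_MANAGES_FROM = {
    "on-premises": len(LAYERS),              # provider manages nothing
    "IaaS": LAYERS.index("virtualization"),  # customer keeps the OS and above
    "PaaS": LAYERS.index("runtime"),         # customer keeps applications and data
    "SaaS": 0,                               # provider manages everything
}


def who_manages(model: str, layer: str) -> str:
    return "provider" if LAYERS.index(layer) >= PROVIDER_MANAGES_FROM[model] else "customer"


for model in PROVIDER_MANAGES_FROM:
    customer = [layer for layer in LAYERS if who_manages(model, layer) == "customer"]
    print(f"{model:12} customer manages: {customer}")
```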

Inspection of Cloud Computing Technologies:

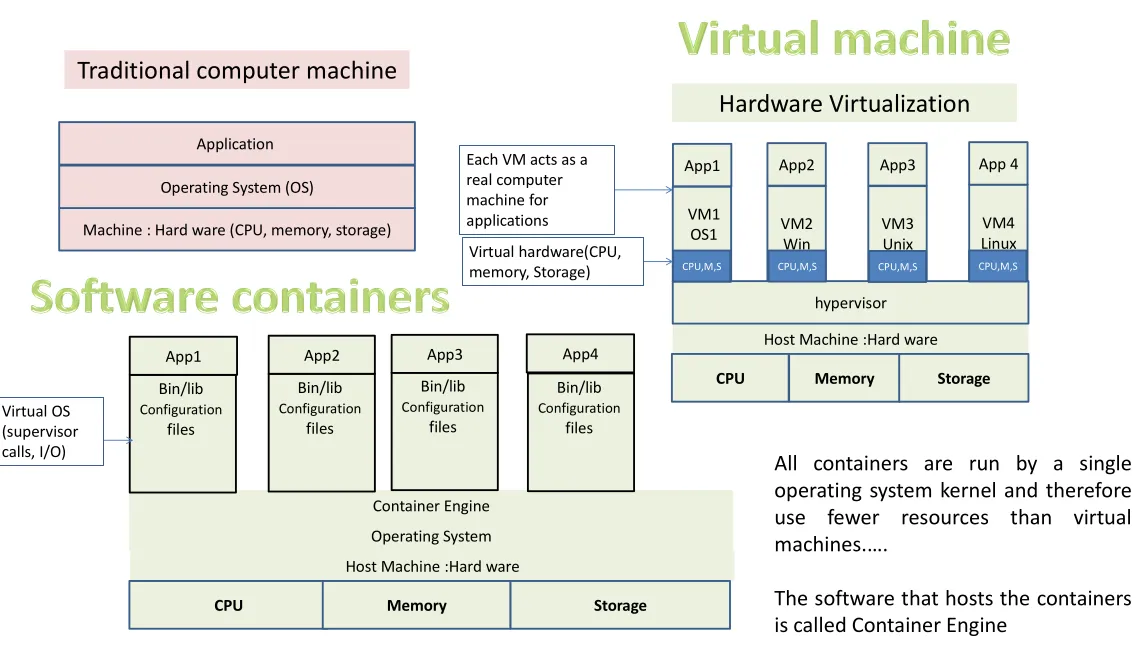

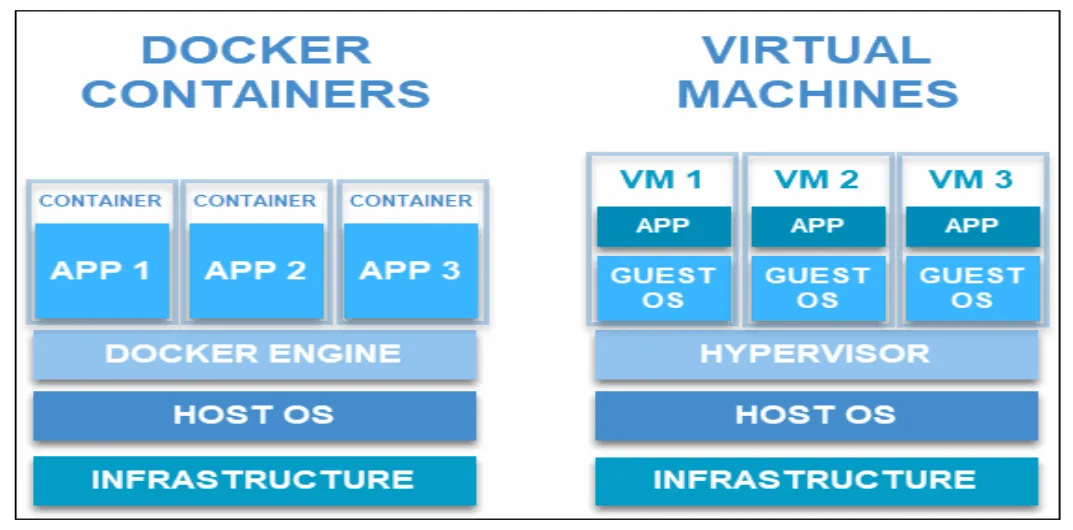

Virtualization

Idea

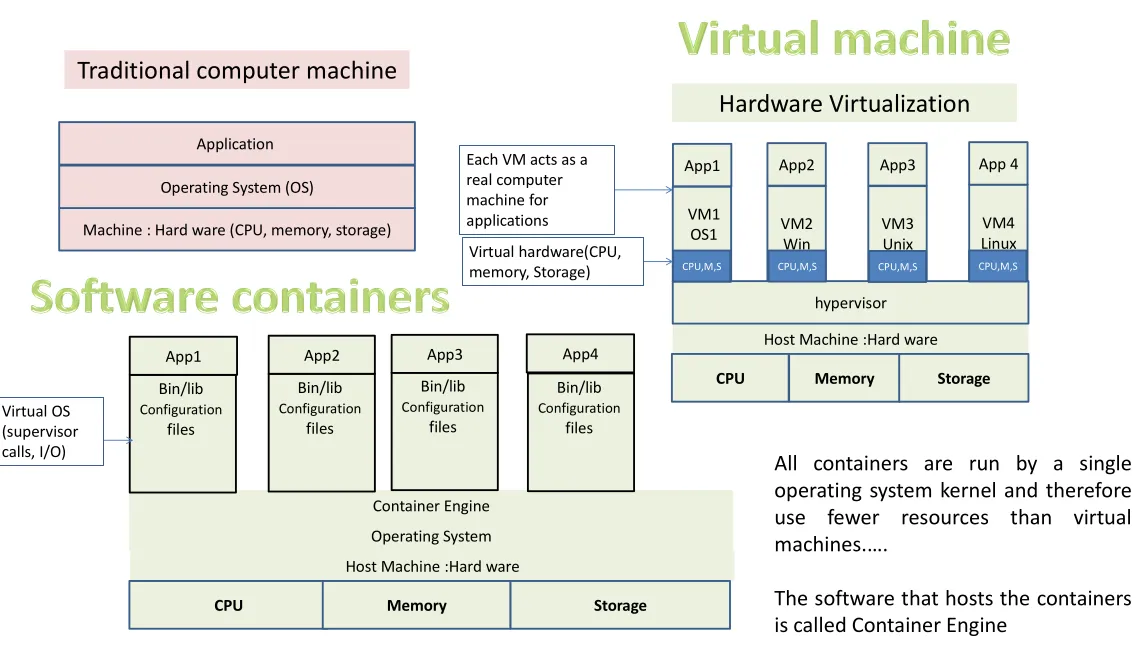

- A Virtual Machine (VM) is a compute resource that uses software instead of a physical computer to run programs. Each virtual machine runs its own operating system and functions separately from the others.

- A macOS VM, a Windows VM, and a Unix VM can run on the same physical PC.

Definition

- Virtualization: “the creation of a virtual (rather than actual) version of something, such as a server, a desktop, a storage device, an operating system or network resources.” It is the process of creating a software-based, “virtual” computer from a real physical computer.

Architecture

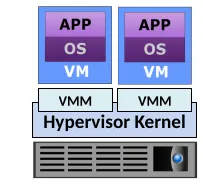

- Hypervisor Kernel

- Software that is installed on a compute system and enables multiple OSs to run concurrently on a physical compute system.

- Provides functionality similar to an OS kernel (Manage hardware)

- Designed to run multiple VMs concurrently

- Hypervisors provide an abstraction layer to separate the virtual machines from the system hardware.

- You can install a virtual machine with any operating system without having to worry about the hardware platform.

- The hypervisor also separates virtual machines from each other. So, if one virtual machine is having issues, it does not affect the operation of the other virtual machines.

- Types: A hypervisor is a program used to run and manage one or more virtual machines on a computer. There are two types:

| | Bare-metal Hypervisor | Hosted Hypervisor |
|---|---|---|
| What it is | An operating system | Installed as an application on an OS |
| Installation | Installed on bare-metal hardware | Relies on the OS running on the physical machine for device support |
| Management | Manages the hardware | Depends on the host OS for device support |
| Complexity | More complex to implement | Less complex to implement |
| Suitable for | Enterprise data centers and cloud infrastructure | Smaller scales and personal use |
| Products | VMware ESX Server, Microsoft Hyper-V, IBM POWER Hypervisor (PowerVM), Xen, Citrix XenServer, Oracle VM Server | VMware Server, VMware Workstation, VMware Fusion, Microsoft Virtual PC, Oracle VirtualBox |

- Reference modules

- DISPATCHER: The dispatcher behaves like the entry point of the monitor and reroutes the instructions of the virtual machine instance to one of the other two modules.

- ALLOCATOR: The allocator is responsible for deciding the system resources to be provided to the virtual machine instance. It means whenever a virtual machine tries to execute an instruction that results in changing the machine resources associated with the virtual machine, the allocator is invoked by the dispatcher.

- INTERPRETER: The interpreter module consists of interpreter routines. These are executed, whenever a virtual machine executes a privileged instruction.

- Virtual machine manager (VMM)

- Abstracts hardware (split hardware)

- Each VM is assigned a VMM

- Each VMM gets a share of physical resources
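The dispatcher, allocator, and interpreter reference modules can be pictured as a small routing table. The sketch below is a toy model, not a real hypervisor: the opcodes, the `kind` labels, and the memory-only allocator are hypothetical, and it assumes (as in the classic reference model) that ordinary, non-privileged instructions run directly on the CPU without involving the monitor.

```python
from dataclasses import dataclass


@dataclass
class Instruction:
    opcode: str
    kind: str           # hypothetical labels: "normal", "resource" or "privileged"
    amount_mb: int = 0  # only used by resource-changing instructions


class Allocator:
    """Decides which system resources a VM instance may receive."""

    def __init__(self, free_memory_mb: int) -> None:
        self.free_memory_mb = free_memory_mb

    def handle(self, vm: dict, instr: Instruction) -> str:
        if instr.amount_mb > self.free_memory_mb:
            return f"{instr.opcode}: denied"
        self.free_memory_mb -= instr.amount_mb
        vm["memory_mb"] += instr.amount_mb
        return f"{instr.opcode}: allocated {instr.amount_mb} MB"


class Interpreter:
    """Runs an interpreter routine in place of a privileged instruction."""

    def handle(self, vm: dict, instr: Instruction) -> str:
        return f"{instr.opcode}: emulated for {vm['name']}"


class Dispatcher:
    """Entry point of the monitor: reroutes each instruction to the right module."""

    def __init__(self, allocator: Allocator, interpreter: Interpreter) -> None:
        self.allocator = allocator
        self.interpreter = interpreter

    def dispatch(self, vm: dict, instr: Instruction) -> str:
        if instr.kind == "resource":
            return self.allocator.handle(vm, instr)
        if instr.kind == "privileged":
            return self.interpreter.handle(vm, instr)
        # Assumption: ordinary instructions run directly on the CPU.
        return f"{instr.opcode}: executed directly"


vm = {"name": "vm1", "memory_mb": 0}
monitor = Dispatcher(Allocator(free_memory_mb=1024), Interpreter())
program = [
    Instruction("ADD", "normal"),
    Instruction("ALLOC_MEM", "resource", amount_mb=512),
    Instruction("HLT", "privileged"),
    Instruction("ALLOC_MEM", "resource", amount_mb=1024),
]
results = [monitor.dispatch(vm, instr) for instr in program]
print("\n".join(results))
```

The last request is denied because only 512 MB remain after the first allocation, which is the allocator "deciding the system resources to be provided".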

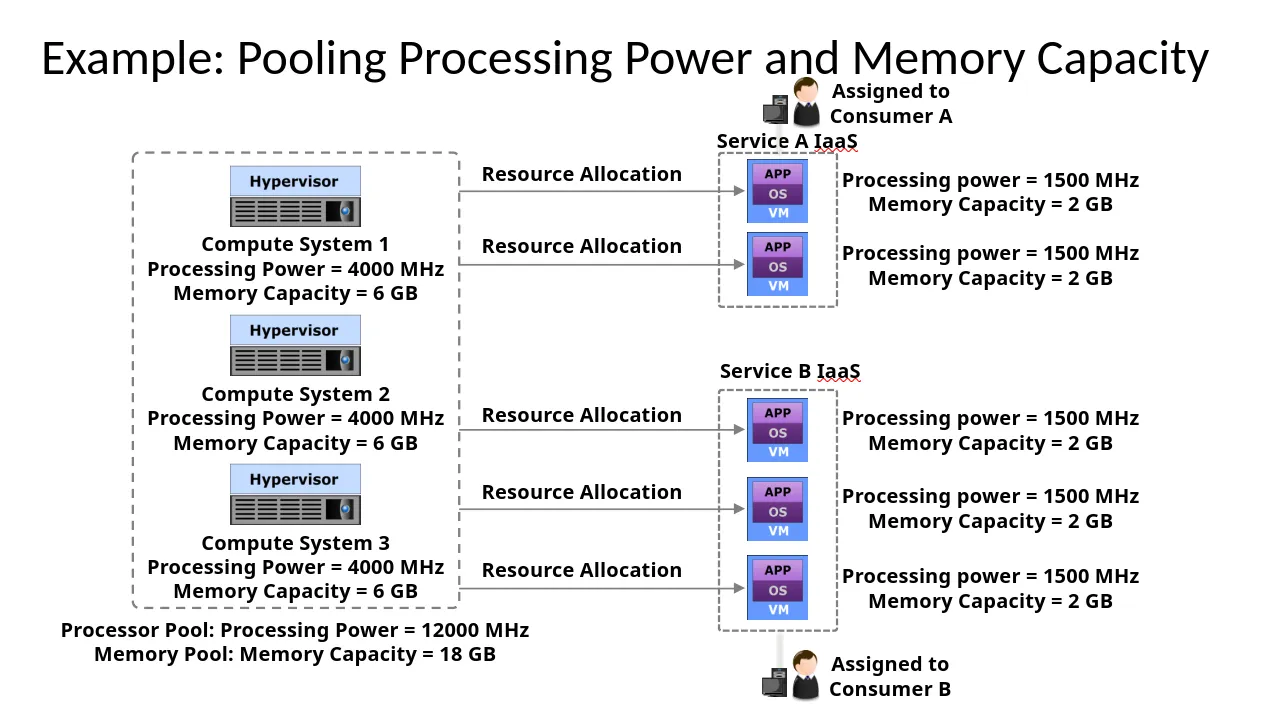

- Resource Pool

A logical abstraction of the aggregated computing resources, such as processing power, memory capacity, storage, and network bandwidth, that are managed collectively.

Cloud services obtain computing resources from resource pools

Resources are dynamically allocated as per consumer demand

Resource pools are sized according to service requirements
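A resource pool can be modeled as a shared capacity from which services draw on demand and to which they return resources when done. A minimal sketch, assuming only two resource types (CPUs and memory in GB) and hypothetical service names:

```python
class ResourcePool:
    """Aggregated capacity shared by several cloud services (hypothetical units)."""

    def __init__(self, cpus: int, memory_gb: int) -> None:
        self.capacity = {"cpus": cpus, "memory_gb": memory_gb}
        self.allocations: dict[str, dict[str, int]] = {}

    def free(self, resource: str) -> int:
        used = sum(alloc[resource] for alloc in self.allocations.values())
        return self.capacity[resource] - used

    def allocate(self, service: str, cpus: int, memory_gb: int) -> bool:
        """Grant resources on demand if the pool still has room."""
        if cpus > self.free("cpus") or memory_gb > self.free("memory_gb"):
            return False
        alloc = self.allocations.setdefault(service, {"cpus": 0, "memory_gb": 0})
        alloc["cpus"] += cpus
        alloc["memory_gb"] += memory_gb
        return True

    def release(self, service: str) -> None:
        """Return everything a service holds to the pool."""
        self.allocations.pop(service, None)


pool = ResourcePool(cpus=16, memory_gb=64)
print(pool.allocate("web", cpus=4, memory_gb=8))     # True
print(pool.allocate("db", cpus=8, memory_gb=32))     # True
print(pool.allocate("batch", cpus=8, memory_gb=8))   # False: only 4 CPUs left
pool.release("web")
print(pool.allocate("batch", cpus=8, memory_gb=8))   # True
```

Sizing the pool (the `cpus` and `memory_gb` arguments) according to service requirements is the last point above; a real platform would also handle overcommitment and placement across hosts.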

Cloud Benefits of Resource Virtualization

- Allows running several operating systems on the same server.

- Performance isolation:

- We can dynamically assign and account for resources across different applications.

- System security:

- It allows isolation of services running on the same hardware.

- It isolates the execution of each VM from other VMs and from the hosting OS.

- Reliability:

- It allows applications to migrate from one platform to another.

- Ability to move a VM from one server to another: since a VM is independent of the physical server it runs on, we can move it from one server to another to provide flexibility and reliability.

- Ability to copy a VM file and store it: VMs are not hardware; they are software files that can be copied and stored to avoid data loss in case of failure.

- Scalability:

- Scaling up by requesting more VMs, or down by shutting down unused VMs.
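Scaling with VMs is usually driven by a simple rule: run enough VMs to carry the current load, within a minimum and maximum. The sketch below assumes load and per-VM capacity are measured in the same hypothetical unit (for example requests per second) and that the `min_vms`/`max_vms` limits are chosen by the operator.

```python
import math


def desired_vm_count(total_load: float, capacity_per_vm: float,
                     min_vms: int = 1, max_vms: int = 10) -> int:
    """Enough VMs to carry the load, clamped to the allowed range."""
    needed = math.ceil(total_load / capacity_per_vm)
    return max(min_vms, min(max_vms, needed))


def scaling_action(current_vms: int, desired_vms: int) -> str:
    if desired_vms > current_vms:
        return f"scale up: start {desired_vms - current_vms} VM(s)"
    if desired_vms < current_vms:
        return f"scale down: shut down {current_vms - desired_vms} unused VM(s)"
    return "no change"


print(scaling_action(2, desired_vm_count(total_load=450, capacity_per_vm=100)))
# scale up: start 3 VM(s)
print(scaling_action(5, desired_vm_count(total_load=120, capacity_per_vm=100)))
# scale down: shut down 3 unused VM(s)
```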

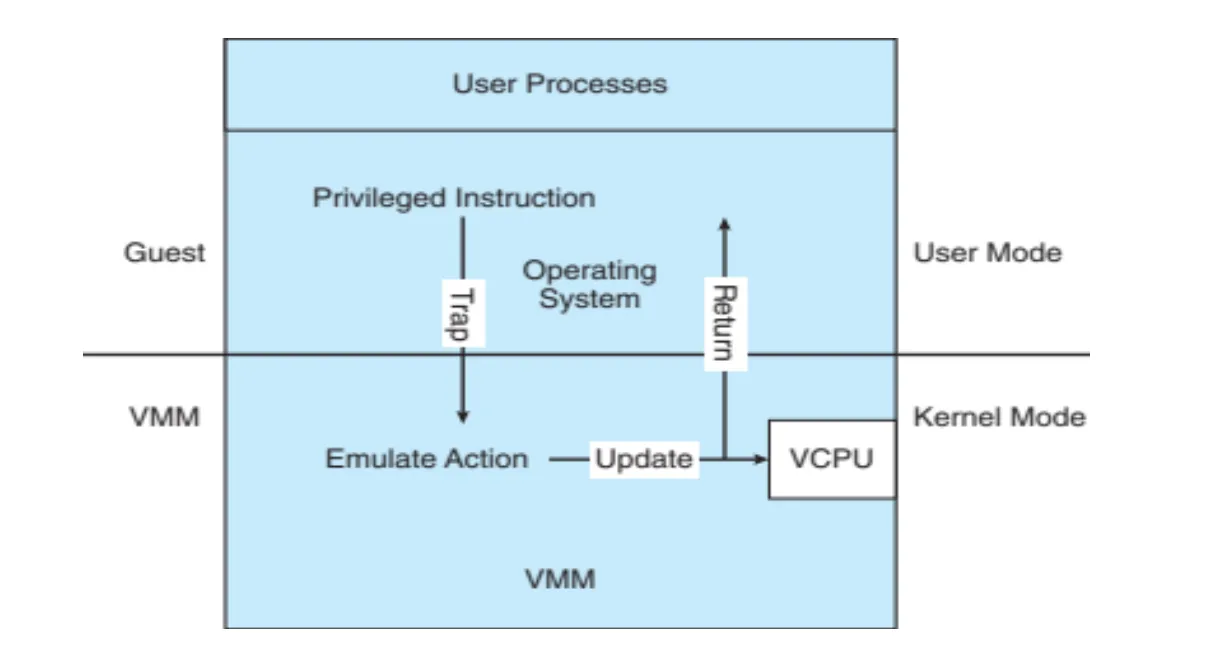

OS Management of Virtualization Process

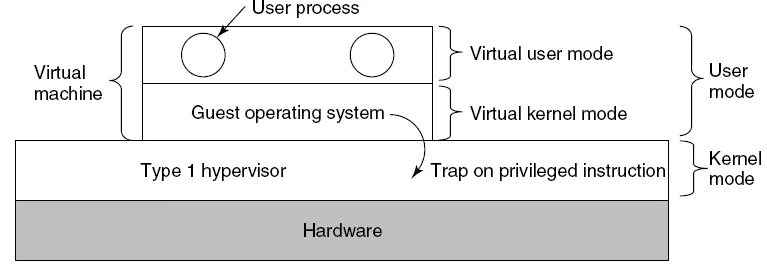

- Kernel Mode & User Mode Handling:

- Uses Trap-And-Emulate

- Building block: dual-mode CPU:

The guest executes in user mode; the kernel runs in kernel mode.

It is not safe to let the guest kernel run in kernel mode too.

So the VM needs two modes, virtual user mode and virtual kernel mode, both of which run in real user mode.

Actions in the guest that usually cause a switch to kernel mode must instead cause a switch to virtual kernel mode.
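Trap-and-emulate can be traced in a few lines: the whole guest runs in real user mode, so a privileged instruction traps to the VMM, which then applies its effect to the guest's *virtual* CPU state (if the guest is in virtual kernel mode) or reflects the fault back to the guest (if it is in virtual user mode). The opcodes below are hypothetical stand-ins, not a real instruction set.

```python
from dataclasses import dataclass

# Hypothetical privileged opcodes (a real ISA has its own list).
PRIVILEGED = {"CLI", "STI", "SET_USER_MODE"}


@dataclass
class VirtualCPU:
    virtual_mode: str = "kernel"     # the mode the guest *believes* it is in
    interrupts_enabled: bool = True  # virtual copy of privileged CPU state


class Trap(Exception):
    """Raised by the hardware when a privileged instruction runs in real user mode."""


def hardware_execute(opcode: str) -> str:
    # The whole guest, including its kernel, really runs in user mode,
    # so the CPU refuses privileged instructions and traps to the VMM.
    if opcode in PRIVILEGED:
        raise Trap(opcode)
    return f"{opcode}: ran natively"


def vmm_emulate(vcpu: VirtualCPU, opcode: str) -> str:
    if vcpu.virtual_mode == "user":
        # A real CPU would also refuse this in user mode: reflect the fault to the guest kernel.
        return f"{opcode}: fault passed to guest kernel"
    # Guest is in virtual kernel mode: apply the effect to the virtual state only.
    if opcode == "CLI":
        vcpu.interrupts_enabled = False
    elif opcode == "STI":
        vcpu.interrupts_enabled = True
    elif opcode == "SET_USER_MODE":
        vcpu.virtual_mode = "user"
    return f"{opcode}: emulated"


def run_guest(vcpu: VirtualCPU, program: list[str]) -> list[str]:
    results = []
    for opcode in program:
        try:
            results.append(hardware_execute(opcode))
        except Trap:
            results.append(vmm_emulate(vcpu, opcode))
    return results


vcpu = VirtualCPU()
results = run_guest(vcpu, ["ADD", "CLI", "SET_USER_MODE", "STI"])
print(results)
# ['ADD: ran natively', 'CLI: emulated', 'SET_USER_MODE: emulated', 'STI: fault passed to guest kernel']
print(vcpu.interrupts_enabled, vcpu.virtual_mode)  # False user
```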

Virtualization Use Cases

- On-Cloud

- Azure

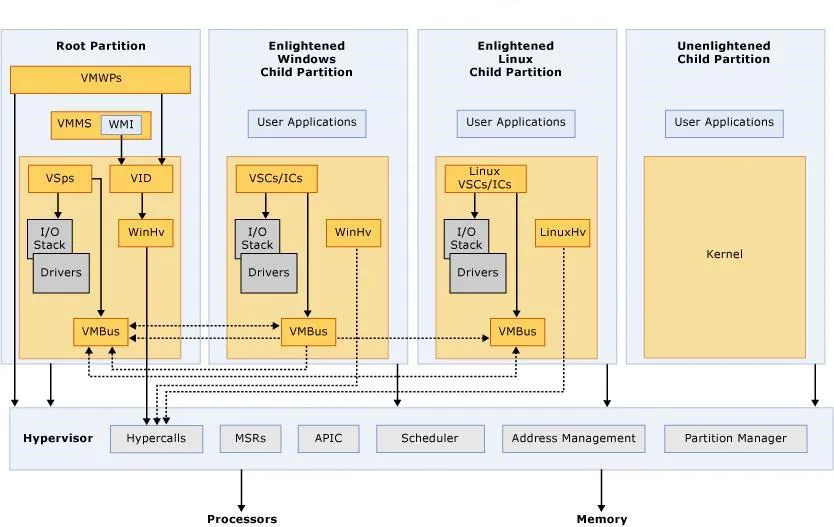

- Hypervisor – A layer of software that sits between the hardware and one or more operating systems. Its primary job is to provide isolated execution environments called partitions. The hypervisor controls and arbitrates access to the underlying hardware.

- Root Partition – (parent partition) Manages machine-level functions such as device drivers, power management, and device hot addition/removal. The root (or parent) partition is the only partition that has direct access to physical memory and devices.

- Child Partition – Partition that hosts a guest operating system - All access to physical memory and devices by a child partition is provided via the Virtual Machine Bus (VMBus) or the hypervisor.

- On-Premises

- Hyper-V

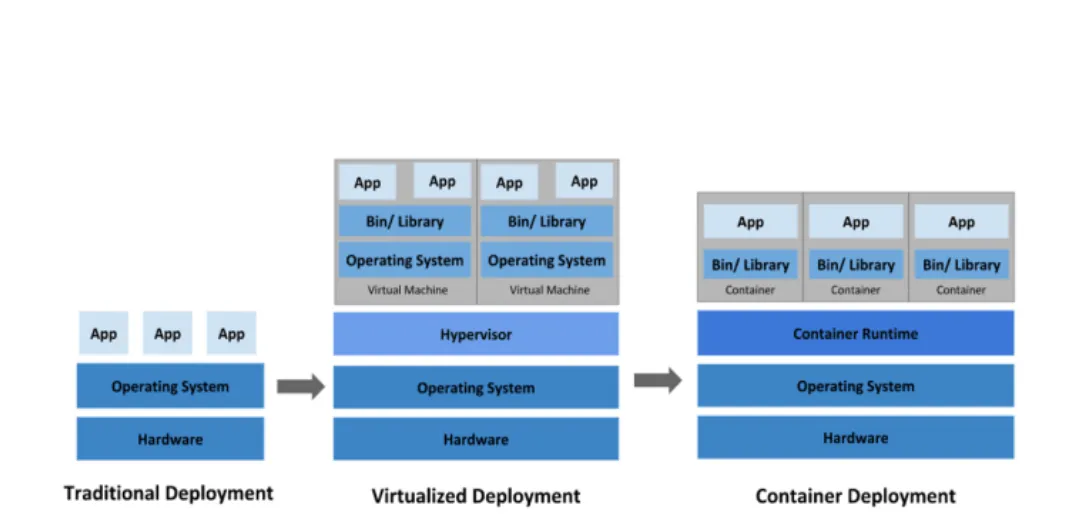

Virtualization Disadvantages

- Resource-consuming, especially when running two operating systems.

- Duplication of the OS when two VMs run the same operating system.

- It takes a long time to initialize a VM and to start execution.

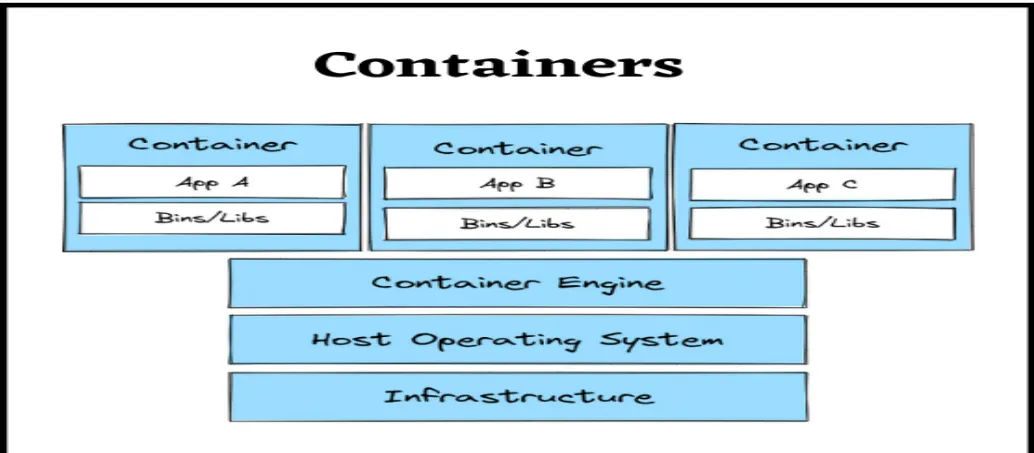

Containers

Software containers are technologies that allow the packaging and isolation of applications with their entire runtime environment (all of the files necessary to run). This makes it easy to move the contained application between environments (development, test, production) while retaining full functionality.

Definition

- Containers are packages of software that contain all of the necessary elements to run in any environment (binary code, libraries, configuration files). In this way, containers virtualize the operating system and run anywhere, independent of the operating system and infrastructure.

Key Notes

- Containers are a form of operating system virtualization (they do not include an OS).

- A single container might be used to run anything from a small software process to a larger application.

- Inside a container are all the necessary executables, binary code, libraries, and configuration files.

- Unlike server or machine virtualization approaches, however, containers do not contain an operating system.

- This makes them more lightweight and portable, with significantly less overhead.

Benefits of Containers

- Portability (cross-platform): Containers run in any environment, independent of the OS.

- Cost

- No need to buy licenses

- Separation of concerns (developers focus on code and operators focus on configuring the environment)

- Efficiency

- Containers can be deployed to the provider's site to be executed there; several containers share the same OS and hardware.

- Scalability: Containers can be easily scaled up or down to meet demand.

Unix Sandboxing

- Using namespaces, Linux creates separate sandboxes, each with its own process ID (PID) space.

- You can have another sandbox in a separate PID namespace.

- Linux provides this isolation natively, without installing a hypervisor. Inside this isolated box, we can run our application. It is very lightweight.

Architecture

- Runtime Engine (Virtual Kernel)

- Interacts with containers to perform system calls.

- A container runtime engine (daemon):

- A software package that leverages specific features of a supported operating system to run the container (the interface between a container and the OS).

- The most popular runtime engines are Docker, Podman, containerd, and CRI-O (an implementation of the Kubernetes Container Runtime Interface, CRI).

- It manages multiple things: networks, file systems, multiple containers, and volumes.

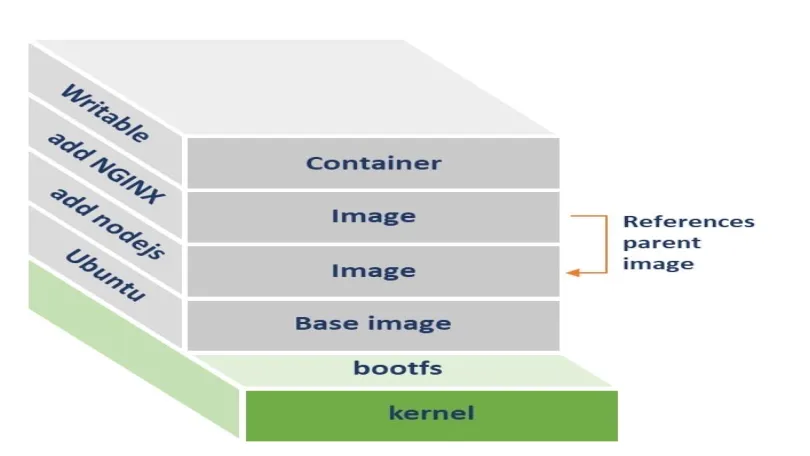

Images & Containers

- A software image is a lightweight, stand-alone, executable package that includes everything needed to run a piece of software, including the code, a runtime, libraries, and configuration files.

- A software container runs the included image, so a container is considered an instance of an image. It runs completely isolated from the host environment by default, only accessing host files and ports if configured to do so.

- Containers run apps natively on the host machine’s kernel. They have better performance characteristics than virtual machines, which only get virtual access to host resources through a hypervisor.

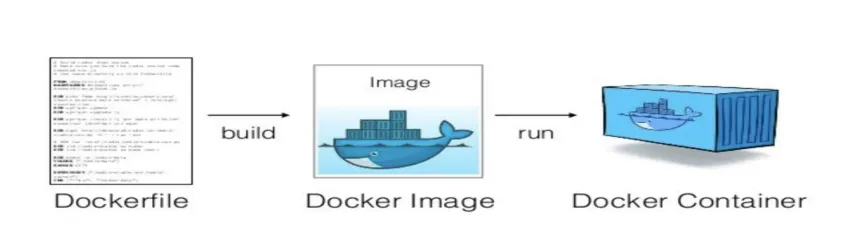

Docker

- Docker provides the ability to package and run an application in a loosely isolated environment called a container.

- The isolation and security allow you to run many containers simultaneously on a given host.

Steps from Zero to Running

- The container-runtime manager (typically containerd or CRI-O) downloads the image specified in the “FROM” instruction from its corresponding registry, to be used as a base image referred to as “layer 0” (since no explicit registry is defined, Docker Hub is used implicitly in this case).

- The base image is extended with upgraded packages, resulting in a new intermediate image composed of 2 layers (layer 0 and layer 1).

- The “layer 1 image” is extended with a new package, resulting in a second intermediate image composed of 3 layers (layer 0, layer 1, and layer 2). Since there are no more instructions in the Dockerfile, the second image containing all the layers becomes the “final image”.

- The final image is the output expected by the end user and corresponds to the image built from the provided Dockerfile.
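Each Dockerfile instruction produces one layer on top of its parent, which is why an unchanged prefix of a Dockerfile can be reused on the next build. The sketch below is a simplified model, not Docker's actual algorithm: it identifies a layer by hashing its parent's ID plus the instruction text (real Docker also checksums the files used by `ADD`/`COPY`), and the package names in the instructions are hypothetical.

```python
import hashlib


def build(instructions: list[str], cache: set[str]) -> list[tuple[str, str, str]]:
    """Return (layer_id, instruction, 'cached' or 'built') for each Dockerfile line."""
    parent = ""  # layer 0 has no parent
    layers = []
    for instruction in instructions:
        # A layer is identified by its parent layer plus the instruction that created it.
        layer_id = hashlib.sha256(f"{parent}\n{instruction}".encode()).hexdigest()[:12]
        status = "cached" if layer_id in cache else "built"
        cache.add(layer_id)
        layers.append((layer_id, instruction, status))
        parent = layer_id
    return layers


dockerfile = [
    "FROM ubuntu:18.04",                         # layer 0: base image
    "RUN apt-get update && apt-get upgrade -y",  # layer 1: upgraded packages
    "RUN apt-get install -y curl",               # layer 2: a new package
]
cache: set[str] = set()
first = build(dockerfile, cache)
second = build(dockerfile[:2] + ["RUN apt-get install -y git"], cache)

print([status for _, _, status in first])   # ['built', 'built', 'built']
print([status for _, _, status in second])  # ['cached', 'cached', 'built']
```

The ID of the last layer plays the role of the final image; changing only the last instruction rebuilds only the last layer.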

Structure

Docker containers are building blocks for applications. Each container is an image with a readable/writable layer on top of a bunch of read-only layers. These layers (also called intermediate images) are generated when the commands in the Dockerfile are executed during the Docker image build.
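The "writable layer on top of read-only layers" structure behaves like a stack of dictionaries where lookups search from the top and writes always land in the top layer. Python's `collections.ChainMap` has exactly that behavior, so it makes a convenient analogy. This is only a model: real Docker uses a union filesystem (typically the overlay2 storage driver), and the file paths and contents below are hypothetical.

```python
from collections import ChainMap
from types import MappingProxyType

# Hypothetical read-only image layers (file path -> contents), in build order.
layer0 = {"/etc/os-release": "Ubuntu 18.04", "/bin/sh": "<shell>"}
layer1 = {"/etc/os-release": "Ubuntu 18.04 (upgraded)"}
layer2 = {"/usr/bin/curl": "<curl>"}
IMAGE = tuple(MappingProxyType(layer) for layer in (layer0, layer1, layer2))


def run_container(image: tuple) -> ChainMap:
    """A container = a fresh writable layer on top of the image's read-only layers."""
    writable: dict[str, str] = {}
    # ChainMap looks keys up left to right and sends every write to the first
    # mapping, so the writable layer goes first and newer layers shadow older ones.
    return ChainMap(writable, *reversed(image))


c1 = run_container(IMAGE)
c2 = run_container(IMAGE)
c1["/etc/os-release"] = "edited in c1"  # lands in c1's writable layer only
c1["/app/log.txt"] = "hello"

print(c1["/etc/os-release"])  # edited in c1
print(c2["/etc/os-release"])  # Ubuntu 18.04 (upgraded)
print("/app/log.txt" in c2)   # False
print(c1.maps[0])             # {'/etc/os-release': 'edited in c1', '/app/log.txt': 'hello'}
```

Two containers started from the same image share its read-only layers but never see each other's writes, which is also why a container is described as an instance of an image.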

Docker File & Building Automation

Dockerfile

- The build script that allows creating and managing an image, and creating and running a container related to this image.

- A simple text file in which you can write Docker commands.

- A simple Dockerfile can be used to generate a new image that includes the necessary content (which is a much cleaner solution than the bind mount above).

- Manifest file: A file that describes how to deploy the container image (port, network), for example:

- Dockerrun.aws.json

- docker-compose.yaml

Syntax:

- FROM: Defines the parent (base) image, e.g. `FROM ubuntu:18.04`, and generates a layer based on it (an interface, not a complete operating system).

- Pull (the `docker pull` CLI command): Downloads an image from a Docker registry to the local machine.

- ADD: Copies files and directories from the host filesystem or a URL into the Docker image.

- Build (the `docker build` CLI command): Creates an image from a Dockerfile.

- RUN: Executes commands in the image during the build process.

- CMD: Specifies the default command to execute within the container when it starts (after the image has been built).

Steps

First, create a directory containing the Dockerfile:

```shell
mkdir simplidockerexample   # create a directory named 'simplidockerexample'
cd simplidockerexample      # go to the directory
touch Dockerfile            # create a Dockerfile
vi Dockerfile               # open the file and write the instructions
```

Then build the image, declaring where the Dockerfile is located. By adding the -t flag, the new image can be tagged with a name:

```shell
docker build [location of your dockerfile]
docker build -t mysimpel_image .   # "." = the Dockerfile is in the current directory
```

The Docker image is created; you can verify this by executing:

```shell
docker images
```

Image from File

Example 1: Place this file in the same directory as your directory of content ("static-html-directory"):

```dockerfile
FROM nginx
COPY static-html-directory /usr/share/nginx/html
```

Example 2:

```dockerfile
# Add a layer based on Ubuntu 18.04
FROM ubuntu:18.04
# Use the Advanced Package Tool to fetch the latest package lists
RUN apt-get update
CMD ["echo", "Welcome to Simplidockerexample"]
```

Example 3:

```dockerfile
FROM python:3.9
ADD main.py .
# install any more requirements here (e.g. RUN pip install requests)
CMD ["python", "./main.py"]
```

Starting: build the image, then run a container from it (image names must be lowercase):

```shell
docker build -t newimagename .
docker run --name containername newimagename
```

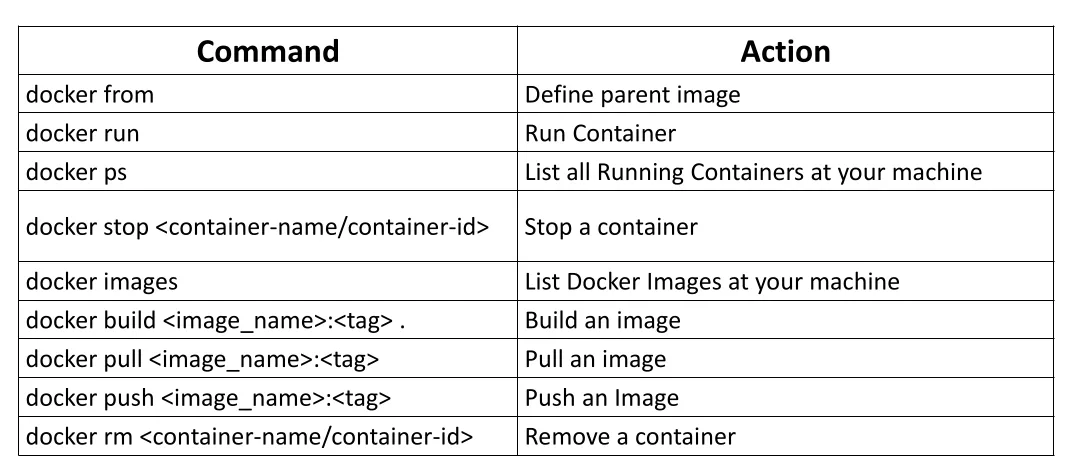

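Docker Commands

Image to Container

```shell
docker pull <image>                        # download an image from a registry
docker build -t <image> .                  # or build the image from a Dockerfile
docker create --name <container> <image>   # create a container from the image
docker start <container>                   # start the container
```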

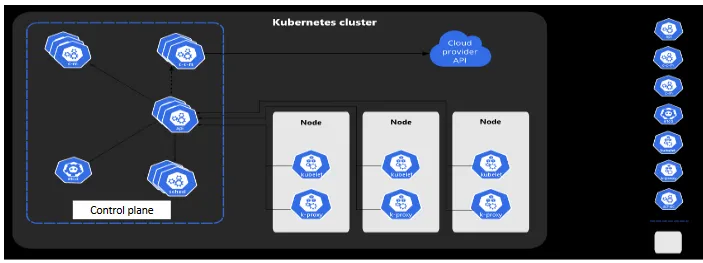

Kubernetes and Managing containers

In a production environment, you need to manage the containers that run the applications and ensure that there is no downtime. For example, if a container goes down, another container needs to start. Kubernetes provides these facilities.
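"If a container goes down, another container needs to start" is implemented in Kubernetes by controllers that repeatedly compare the desired state with the observed state and act on the difference (a reconcile loop). The sketch below is a toy version of that idea: the class, the Pod names, and the "delete the newest Pod first" rule are simplifications of my own, not the Kubernetes API.

```python
class ReplicaController:
    """Keeps `desired` Pods running, in the spirit of a Kubernetes controller."""

    def __init__(self, desired: int) -> None:
        self.desired = desired
        self.pods: dict[str, str] = {}  # Pod name -> status
        self._next_id = 1

    def reconcile(self) -> list[str]:
        actions = []
        # 1. Remove Pods whose container went down.
        for name, status in list(self.pods.items()):
            if status != "Running":
                del self.pods[name]
                actions.append(f"delete {name}")
        # 2. Start replacements until the desired count is reached.
        while len(self.pods) < self.desired:
            name = f"pod-{self._next_id}"
            self._next_id += 1
            self.pods[name] = "Running"
            actions.append(f"create {name}")
        # 3. Remove the newest Pods if there are too many.
        while len(self.pods) > self.desired:
            name = next(reversed(self.pods))
            del self.pods[name]
            actions.append(f"delete {name}")
        return actions


rc = ReplicaController(desired=3)
print(rc.reconcile())        # ['create pod-1', 'create pod-2', 'create pod-3']
rc.pods["pod-2"] = "Failed"  # a container goes down
print(rc.reconcile())        # ['delete pod-2', 'create pod-4']
rc.desired = 1               # scale down
print(rc.reconcile())        # ['delete pod-4', 'delete pod-3']
```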

DNS

- All Kubernetes clusters should have cluster DNS.

- Cluster DNS provides DNS records for Kubernetes services.

Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services.

Kubernetes Components:

Nodes: A set of worker machines that run containerized applications. Every cluster has at least one worker node.

K8s as an Example:

It allows both configuration and automation of the container runtime.

It provides better support for microservices architectures: distributed applications and microservices can be more easily isolated, deployed, and scaled using individual container building blocks.

Components

Worker nodes

- Host the Pods (sets of one or more running containers) that are the components of the application.

- Components:

Node components run on every node, maintaining running pods and providing the Kubernetes runtime environment.

- kubelet

- The kubelet takes a set of Pods that are provided through various mechanisms and ensures that the containers described in those Pods are running and healthy.

- kube-proxy

- kube-proxy is a network proxy that runs on each node in your cluster.

- Allows network communication to your Pods from network sessions inside or outside of your cluster.

Control plane

- Manages the worker nodes and the Pods in the cluster.

- Controls the scheduling of Pods onto nodes, for example starting up a new Pod or replicating an existing Pod.

- Components

- kube-apiserver

- The front end for the Kubernetes control plane.

- Validates and configures data for pods, services, replication controllers, and others.

- kube-scheduler: A control plane component that watches for newly created Pods with no assigned node and selects a node for them to run on.

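kube-scheduler's job of "selecting a node" for an unassigned Pod can be pictured as filter-then-score. The sketch below is loosely modeled on that idea and is not the real scheduler: it filters out nodes without enough free CPU and memory, then prefers the node with the most free resources, and "binds" the Pod by reserving its requests. The node names and numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_m: int    # free CPU in millicores
    free_mem_mib: int  # free memory in MiB


def schedule(pod_cpu_m: int, pod_mem_mib: int, nodes: list[Node]) -> Optional[str]:
    # Filter: keep only nodes with enough free CPU and memory for the Pod.
    feasible = [n for n in nodes
                if n.free_cpu_m >= pod_cpu_m and n.free_mem_mib >= pod_mem_mib]
    if not feasible:
        return None  # no node fits: the Pod stays Pending
    # Score: prefer the node with the most free resources (spreads the load).
    best = max(feasible, key=lambda n: (n.free_cpu_m, n.free_mem_mib))
    # Bind: reserve the Pod's resources on the chosen node.
    best.free_cpu_m -= pod_cpu_m
    best.free_mem_mib -= pod_mem_mib
    return best.name


nodes = [Node("node-a", 500, 1024), Node("node-b", 2000, 4096), Node("node-c", 4000, 512)]
print([schedule(1000, 1024, nodes) for _ in range(3)])  # ['node-b', 'node-b', None]
```

node-a lacks CPU and node-c lacks memory, so both Pods land on node-b until it is full; the third Pod cannot be placed.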

Container Runtime

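The container runtime is the software that is responsible for running containers. Kubernetes supports several container runtimes, such as containerd and CRI-O; Docker Engine can still be used through the cri-dockerd adapter, since Kubernetes 1.24 removed the built-in dockershim.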

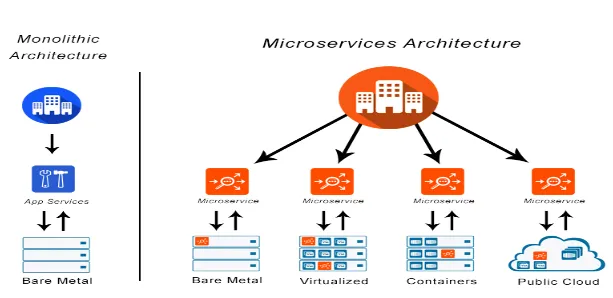

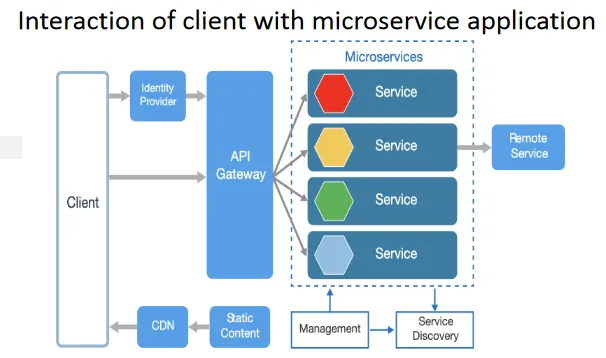

Microservices

- Benefits

- Rapid development

- DevOps

- Scalability

- Heterogeneous environments

- Monolithic vs. Modern Approach

| | Monolithic Architecture | Microservices Architecture |
|---|---|---|
| Structure | Single, tightly coupled unit | Multiple, loosely coupled services |
| Scalability | Difficult to scale | Easy to scale horizontally and vertically |
| Flexibility | Difficult to change | Easy to change and update individual services |
| Resilience | A single point of failure | More resilient to failure, as one service failing does not necessarily impact the entire system |
| Complexity | Simpler to develop and deploy | More complex to develop and deploy, but easier to maintain and evolve |

Distributed Software Architecture (MVC)

- A distributed system is one in which the computing power and software are distributed across several servers, connected through a network, communicating and coordinating their actions by passing messages to each other.

Microservices Architecture?

An SOA variation, microservice architecture is an approach to developing a single application as a suite of small services. Each service:

- Runs its own processes

- Can be written in any programming language and is platform agnostic

- Is independently deployable and replaceable

- May use different storage technologies

- Requires a minimum of centralized management

- Large companies, such as Amazon, eBay, and Netflix, have already adopted microservices.
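The properties above (each service owns its storage, services coordinate only by passing messages) can be shown with two tiny services. This is an in-process toy: the `MessageBus` stands in for the network calls (HTTP or a message broker) real microservices would use, and the service names and message format are hypothetical.

```python
class MessageBus:
    """Delivers messages to services by name; services never share memory."""

    def __init__(self) -> None:
        self._services = {}

    def register(self, name: str, service) -> None:
        self._services[name] = service

    def send(self, name: str, message: dict) -> dict:
        return self._services[name].handle(message)


class InventoryService:
    def __init__(self) -> None:
        self._stock = {"book": 2}  # private datastore, owned only by this service

    def handle(self, message: dict) -> dict:
        if message["type"] == "reserve":
            item = message["item"]
            if self._stock.get(item, 0) > 0:
                self._stock[item] -= 1
                return {"ok": True}
            return {"ok": False, "reason": "out of stock"}
        return {"ok": False, "reason": "unknown message"}


class OrderService:
    def __init__(self, bus: MessageBus) -> None:
        self._bus = bus
        self._orders: list[str] = []  # its own, separate datastore

    def handle(self, message: dict) -> dict:
        if message["type"] == "place_order":
            # Coordinate with the inventory service by message, not by reading its data.
            reply = self._bus.send("inventory", {"type": "reserve", "item": message["item"]})
            if reply["ok"]:
                self._orders.append(message["item"])
            return reply
        return {"ok": False, "reason": "unknown message"}


bus = MessageBus()
bus.register("inventory", InventoryService())
bus.register("orders", OrderService(bus))
print([bus.send("orders", {"type": "place_order", "item": "book"})["ok"] for _ in range(3)])
# [True, True, False]
```

Because each service is reachable only through its messages, either one could be rewritten in another language or redeployed independently without the other noticing.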

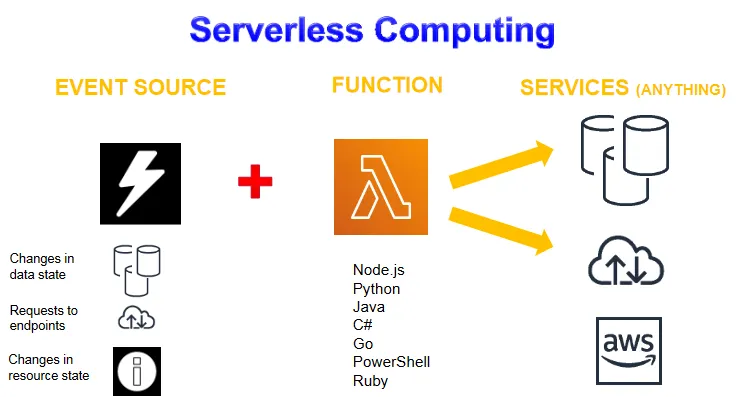

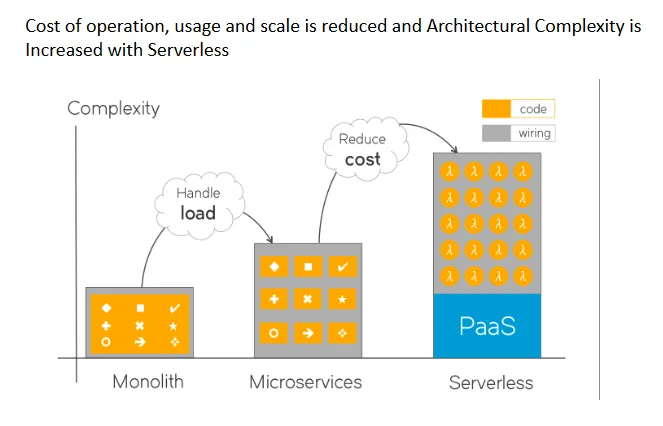

Serverless Computing

Terminology

The term serverless computing is a misnomer, as the technology does use servers; they are simply maintained by the provider and used only when needed. Serverless computing is considered a new generation of PaaS: the cloud service provider takes care of receiving client requests and responding to them, monitoring operations, scheduling tasks, and planning capacity. Developers do not need to think about server infrastructure or container issues and can concentrate entirely on writing code. In serverless computing, resources are assigned to users only at the time they are needed.

Benefits

- No Servers or containers to Manage

- Automatic Continuous Scaling

- Dynamic allocation of resources

- Never Pay for Idle: pay-per-usage

- Highly available and secure

Diagram

- Microservices Architecture

- Smaller-grained service

- Specified Functions

- Defined Capabilities

- Increased complexity

- FaaS

- Serverless computing is also sometimes called Function as a Service or event-based programming, as it uses functions as the deployment unit.

- The event-driven approach means that no resources are used when no functions are executed or an application doesn’t run.

- It also means that developers don’t have to pay for idle time.

- Definition: FaaS simplifies deploying applications to the cloud. With serverless computing, you install a piece of business logic, a “function,” on a cloud platform. The platform executes the function on demand, so you can run backend code without provisioning or maintaining servers. The cloud platform makes the function available and manages resource allocation for you. If the system needs to accommodate 100 simultaneous requests, it allocates 100 (or more) copies of your service. If demand drops, it destroys the unneeded ones. You pay for the resources your functions use, and only when your functions need them.

- To create an application, developers should consider how to break it into smaller functions to reach a granular level for Serverless compatibility.

- Each function needs to be written in one of the programming languages supported by the Serverless provider.

- Each function is associated with an event that triggers its execution.

- In order to initialize an instance of a function in response to an event, a FaaS platform requires some time, known as a cold start. Its duration may depend on the programming language, the number of libraries you use, the configuration of the function environment, and so on.
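The FaaS behavior described above (functions triggered by events, one instance per simultaneous request, cold starts for new instances, no cost while idle) can be captured in a small model. The sketch below is a toy simulation with hypothetical names; it counts cold starts and billed invocations rather than measuring real time, and it assumes idle instances are destroyed only when `scale_to_zero` is called.

```python
from typing import Callable


class FaaSPlatform:
    """Toy FaaS platform: event-triggered functions, warm instances, cold starts."""

    def __init__(self) -> None:
        self.handlers: dict[str, Callable[[dict], object]] = {}
        self.warm: dict[str, int] = {}  # idle, already-initialized instances per event type
        self.cold_starts = 0
        self.invocations = 0            # what the user is billed for

    def register(self, event_type: str, handler: Callable[[dict], object]) -> None:
        self.handlers[event_type] = handler
        self.warm[event_type] = 0

    def handle_burst(self, event_type: str, events: list[dict]) -> list:
        """Handle events that arrive simultaneously: one instance per concurrent event."""
        needed = len(events)
        new_instances = max(0, needed - self.warm[event_type])
        self.cold_starts += new_instances  # each new instance pays the cold-start delay
        self.warm[event_type] = max(self.warm[event_type], needed)
        self.invocations += needed
        return [self.handlers[event_type](event) for event in events]

    def scale_to_zero(self) -> None:
        """After a period of inactivity, idle instances are destroyed (no idle cost)."""
        for event_type in self.warm:
            self.warm[event_type] = 0


platform = FaaSPlatform()
platform.register("image_uploaded", lambda e: f"thumbnail for {e['file']}")

print(platform.handle_burst("image_uploaded", [{"file": f"{i}.png"} for i in range(3)]))
platform.handle_burst("image_uploaded", [{"file": "a.png"}, {"file": "b.png"}])  # reuses warm instances
platform.scale_to_zero()
platform.handle_burst("image_uploaded", [{"file": "c.png"}])                     # cold start again
print(platform.cold_starts, platform.invocations)  # 4 6
```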